Server-side SEO

While developing a website for your startup, make sure your server and hosting issues are covered. Here are some considerations to watch for in the lead-up to your launch and after it.

1. Monitor site uptime: Use a free uptime monitoring tool such as Pingdom or UptimeRobot to verify that your site’s uptime is reasonable. In general, you should aim for an uptime of 99.999 percent. Dropping to 99.9 percent is sketchy, and falling to 99 percent is completely unacceptable. Look for web host uptime guarantees, find out how the host will compensate you when those guarantees are broken, and hold the host to its word with monitoring tools.

2. Switch to HTTPS: Set up HTTPS as early as possible in the process. The later you do this, the more difficult the migration will be. Verify that hypertext transfer protocol (HTTP) always redirects to hypertext transfer protocol secure (HTTPS), and that this never leads to a 404 page. Run a secure sockets layer (SSL) test to ensure your setup is secure.

3. Single URL format: In addition to making sure HTTP always redirects to HTTPS, ensure the www or non-www uniform resource locator (URL) version is used exclusively, and that the alternative always redirects. Ensure this is the case for both HTTP and HTTPS and that all links use the proper URL format and do not redirect.

4. Check your IP neighbors: If your internet protocol (IP) neighbors are showing webspam patterns, Google’s spam filters may have a higher sensitivity for your site. Use an IP neighborhood tool (also known as a network neighbor tool) to take a look at a sample of the sites in your neighborhood and look for any signs of spam. We are talking about outright spam here, not low-quality content. It is a good idea to run this tool on a few reputable sites to get an idea of what to expect from a normal site before jumping to any conclusions.

5. Check for malware: Use Google’s free tool to check for malware on your site.

6. Check for DNS issues: Use a DNS check tool such as the one provided by Pingdom or Mxtoolbox to identify any DNS issues that might cause problems. Talk to your web host about any issues you come across here.

7. Check for server errors: Crawl your site with a tool such as Screaming Frog. You should not find any 301 or 302 redirects, because if you do, it means that you are linking to URLs that redirect. Update any links that redirect. Prioritize removing links to any 404 or 5xx pages, since these pages don’t exist at all, or are broken. Block 403 (forbidden) pages with robots.txt.

8. Check for noindexing and nofollow: Once your site is public, use a crawler to verify that no pages are unintentionally noindexed and that no pages or links are nofollowed at all. The noindex tag tells search engines not to put the page in the search index, which should only be done for duplicate content and content you don’t want to show up in search results. The nofollow tag tells search engines not to pass PageRank from the page, which you should never do to your own content.

9. Eliminate Soft 404s: Test a nonexistent URL in a crawler such as Screaming Frog. If the page does not return a 404 status code, this is a problem. Google wants nonexistent pages to return a 404; you just shouldn’t link to nonexistent pages.
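To put the uptime targets in item 1 and the status-code rules in item 7 into concrete terms, here is a minimal Python sketch. The function names are hypothetical, the year is assumed to have 365 days, and the status codes are assumed to come from a crawl export you already have; nothing here contacts your server.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years are ignored


def downtime_minutes_per_year(uptime_percent):
    """Minutes of downtime per year that a given uptime percentage allows."""
    return (100 - uptime_percent) / 100 * MINUTES_PER_YEAR


def triage_status(status_code):
    """Map the status code of a linked URL to the action item 7 describes."""
    if status_code in (301, 302):
        return "update the link to the final URL"
    if status_code == 404 or 500 <= status_code <= 599:
        return "remove or fix the link"
    if status_code == 403:
        return "block the page in robots.txt"
    if status_code == 200:
        return "no action"
    return "review manually"


if __name__ == "__main__":
    for target in (99.999, 99.9, 99):
        minutes = downtime_minutes_per_year(target)
        print(f"{target}% uptime allows about {minutes:.1f} minutes of downtime per year")
    for code in (200, 301, 403, 404, 503):
        print(code, "->", triage_status(code))
```

Under these assumptions, 99.999 percent uptime allows roughly five minutes of downtime per year, 99.9 percent allows almost nine hours, and 99 percent allows more than three and a half days.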

Indexing

Run your site through the following points both before and after your startup goes live to ensure that pages get added to the search index quickly.

1. Sitemaps: Verify that an Extensible Markup Language (XML) sitemap is located at example.com/sitemap.xml and that the sitemap has been uploaded to Google Search Console and Bing Webmaster Tools. The sitemap should be dynamic and updated whenever a new page is added. The sitemap must use the appropriate URL structure (HTTP versus HTTPS and www versus non-www) and this must be consistent. Verify the sitemap returns only status 200 pages. You don’t want any 404s or 301s here. Use the World Wide Web Consortium (W3C) validator to ensure that the sitemap code validates properly.

2. Google cache: See Google’s cache of your site using a URL like:

http://webcache.googleusercontent.com/search?q=cache:[your URL here]

This will show you how Google sees your site. Navigate the cache to see if any important elements are missing from any of your page templates.

3. Indexed pages: Search Google for site:example.com to see whether the total number of returned results matches the number of pages in your database. If the number is low, it means some pages are not being indexed, and these should be accounted for. If the number is high, it means that duplicate content issues need to be alleviated. While these numbers are rarely 100 percent identical, any large discrepancy should be addressed.

4. RSS feeds: While rich site summary (RSS) feeds are no longer widely used by the general population, crawlers often use them and can pick up additional links from them, which is useful primarily for indexing. Include a link element with rel=alternate in your source code to indicate your RSS feed, and verify that your RSS feed functions properly with a reader.

5. Social media posting: Use an automatic social media poster, like Social Media Auto Publish for WordPress, for your blog or any section of your site that is regularly updated, as long as the content in that section is a good fit for social media. Publication to social media leads to exposure, obviously, but also helps with ensuring your pages get indexed in the search results.

6. Rich snippets: If you are using semantic markup, verify that the rich snippets are showing properly and that they are not broken. If they are missing or broken, validate your markup to ensure there are no errors. It is possible that Google simply won’t show the rich snippets anyway, but if they are missing, it is important to verify that errors aren’t responsible.
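The URL-format requirement for sitemaps in item 1 can be checked mechanically. The following is a minimal Python sketch, assuming the sitemap uses the standard sitemaps.org namespace and that https://www.example.com is the preferred format; the function name and the sample URLs are hypothetical. It checks only the scheme and host of each entry, so the status codes still need to be verified with a crawler.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def inconsistent_sitemap_urls(sitemap_xml, scheme="https", host="www.example.com"):
    """Return sitemap URLs that do not use the preferred scheme and host."""
    root = ET.fromstring(sitemap_xml)
    mismatched = []
    for loc in root.findall(".//sm:loc", SITEMAP_NS):
        url = (loc.text or "").strip()
        parts = urlsplit(url)
        if parts.scheme != scheme or parts.netloc != host:
            mismatched.append(url)
    return mismatched


if __name__ == "__main__":
    sample = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://www.example.com/</loc></url>"
        "<url><loc>http://www.example.com/about</loc></url>"
        "<url><loc>https://example.com/blog</loc></url>"
        "</urlset>"
    )
    # Lists the entries that mix HTTP with HTTPS or www with non-www.
    print(inconsistent_sitemap_urls(sample))
```

Any URL the function returns is one that would redirect under the single URL format described earlier, which is exactly what a sitemap should not contain.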

Content

Put processes in place to ensure that the following issues are handled with each new piece of content you plan to create post-launch, and check each of these points on your site before you launch.

1. Missing titles: Use a crawler to verify that every page on your site has a title tag.

2. Title length: If you are using Screaming Frog, sort your titles by pixel length and identify the length at which your titles are getting cut off in the search results. While it is not always necessary to reduce the title length below this value, it is vital that all the information a user needs to identify the subject of the page shows up before the cutoff point. Note any especially short titles as well, since they should likely be expanded to target more long-tail search queries.

3. Title keywords: Ensure that any primary keywords you are targeting with a piece of content are present in the title tag. Do not repeat keyword variations in the title tag. Consider synonyms, and place the most important keywords closest to the beginning, as long as the result does not read awkwardly. Remember that keyword use should rarely trump the importance of an appealing title.

4. Meta descriptions: Crawl your site to ensure that you are aware of all missing meta descriptions. It is a misconception that every page needs a meta description, since there are some cases where Google’s automated snippet is actually better, such as for pages targeting long-tail queries. However, the choice between a missing meta description and a present one should always be deliberate. Identify and remove any duplicate meta descriptions. These are always bad. Verify that your meta descriptions are shorter than 160 characters so that they don’t get cut off. Include key phrases naturally in your meta descriptions so that they show up in bold in the snippet. (Note that 160 characters is a guideline only, and that both Bing and Google currently use dynamic, pixel-based upper limits.)

5. H1 headers: Ensure that all pages use a header 1 (H1) tag, that there are no duplicate H1 tags, and that there is only one H1 tag for each page. Your H1 tag should be treated similarly to the title tag, with the exception that it doesn’t have any maximum length (although you shouldn’t abuse the length). It is a misconception that your H1 tag needs to be identical to your title tag, although it should obviously be related. In the case of a blog post, most users will expect the header and title tag to be the same or nearly identical. But in the case of a landing page, users may expect the title tag to be a call to action and the header to be a greeting.

6. H2 and other headers: Crawl your site and check for missing H2 headers. These subheadings aren’t always necessary, but pages without them may be walls of text that are difficult for users to parse. Any page with more than three short paragraphs of text should probably use an H2 tag. Verify that H3, H4, and so on are being used for further subheadings. Primary subheadings should always be H2.

7. Keywords: Does every piece of content have a target keyword? Any content that does not currently have an official keyword assigned to it will need some keyword research applied.

8. Alt text: Non-decorative images should always use alt text to identify the content of the image. Use keywords that identify the image itself, not the rest of the content. The alt text is intended as a genuine alternative to the image, used by visually impaired users and by browsers that cannot render the image, so it should always make sense to a human user. Alt text is not for decorative images like borders, only for images that serve as content or interface.
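Several of the checks above (items 1, 4, 5, and 8) can be run against a page’s HTML without a full crawler. Here is a minimal Python sketch using only the standard library; the class and function names are hypothetical, the 160-character limit is the guideline from item 4, and an empty alt attribute is assumed to mark a decorative image, so only images with no alt attribute at all are counted.

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Collects the title, H1 count, meta description, and images without alt."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.h1_count = 0
        self.meta_description = None  # None means no meta description tag
        self.images_missing_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.meta_description = attributes.get("content") or ""
        elif tag == "img" and "alt" not in attributes:
            self.images_missing_alt += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(html):
    """Return a list of human-readable issues found in one page."""
    parser = PageAudit()
    parser.feed(html)
    parser.close()
    issues = []
    if not parser.title.strip():
        issues.append("missing title")
    if parser.h1_count != 1:
        issues.append(f"expected exactly one H1, found {parser.h1_count}")
    if parser.meta_description is not None and len(parser.meta_description) > 160:
        issues.append("meta description longer than 160 characters")
    if parser.images_missing_alt:
        issues.append(f"{parser.images_missing_alt} image(s) without an alt attribute")
    return issues
```

A missing meta description is deliberately not reported as an issue, since item 4 treats that as a choice rather than an error. Duplicate titles, descriptions, and H1 tags across pages would need a second pass that compares the results for every page.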

Site architecture

It’s always best to get site architecture handled as early on in the launch process as possible, but these are important considerations you need to take into account even if you have already launched.

1. Logo links: Verify that the logo in your top menu links back to the homepage, and that this is the case for every section of your site, including the blog. If the blog is its own mini-brand and the logo links back to the homepage of the blog, ensure that there is a prominent homepage link in the top navigation.

2. Navigational anchor text: Your navigational anchor text should use words from your target keyword phrases, but should be short enough to work for navigation. Avoid menus with long anchor text, and avoid repetitious phrasing in your anchor text. For example, a dropdown menu should not list “Fuji apples, Golden Delicious apples, Granny Smith apples, Gala apples” and so on. Instead, the top menu category should be “Apples,” and the dropdown should just list the apples by type.

3. External links: Links to other sites in your main navigation, or otherwise listed on every page, can be interpreted as a spam signal by the search engines. While sitewide external links aren’t necessarily a violation of Google’s policies on link schemes, they can resemble the “Low quality directory or bookmark site links,” and Google explicitly calls out “Widely distributed links in the footers or templates of various sites.” It’s also crucial that any sponsored links use a nofollow attribute and a very good idea to nofollow your comment sections and other user-generated content.

4. Orphan pages: Cross-reference your crawl data with your database to ensure that there are no orphan pages. An orphan page is a URL that is not reachable from any links on your site. Note that this is different from a 404 page, which simply does not exist but may have links pointing to it. Because orphan pages receive no link equity from your site, they are unlikely to rank. They can also be considered “doorway pages” that may be interpreted as spam. If you do not have access to database information, cross-reference crawl data with Google Analytics.

5. Subfolders: URL subfolders should follow a logical hierarchy that matches the navigational hierarchy of the site. Each page should have only one URL, meaning that it should never belong to more than one contradicting category or subcategory. If this is unfeasible for one reason or another, ensure that canonicalization is used to indicate which version should be indexed.

6. Link depth: Important pages, such as those targeting top keywords, should not be more than two levels deep, and should ideally be reachable directly from the homepage. You can check for link depth in Screaming Frog with “Crawl depth.” This is the number of clicks away from the page you enter as the start of your crawl.

7. Hierarchy: While pages should be accessible from the homepage within a small number of clicks, this does not mean that your site should have a completely flat architecture. Unless your site is very small, you don’t want to be able to reach every page directly from the homepage. Instead, your main categories should be reachable from the homepage, and each subsequent page should be reachable from those category pages, followed by subcategories, and so on.

8. No JavaScript pagination: Every individual piece of content should have an individual URL. At no point should a user be able to navigate to a page without changing the browser URL. In addition to making indexation very difficult or impossible for search engines, this also makes it impossible for users to link directly to a page they found useful.

9. URL variables: URL variables such as “?sort=ascending” should not be tacked onto the end of URLs that are indexed in the search engines, because they create duplicate content. Pages containing URL variables should always canonicalize to pages without them.

10. Contextual linking: Google has stated that editorial links embedded in the content count for more than links within the navigation. Best practice is to add descriptive text around the link; your site’s internal links will pass more value if they are contextual. In other words, internal linking within the main body content of the page is important, particularly for blog and editorial content. Even product pages should ideally have recommendation links for similar products.
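The orphan-page, link-depth, and URL-variable checks in items 4, 6, and 9 come down to a few operations on data you already have from a crawl. The following is a minimal Python sketch, assuming the crawl is available as a dictionary that maps each page to the pages it links to; the function names and the sample paths are hypothetical.

```python
from collections import deque
from urllib.parse import urlsplit, urlunsplit


def crawl_depths(links, start):
    """Breadth-first search: number of clicks needed to reach each page from start."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(known_pages, depths):
    """Pages that exist in your database but were never reached by the crawl."""
    return sorted(set(known_pages) - set(depths))


def without_url_variables(url):
    """Drop the query string and fragment to get the URL a page should canonicalize to."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


if __name__ == "__main__":
    links = {
        "/": ["/apples", "/blog"],
        "/apples": ["/apples/fuji", "/apples/gala"],
        "/apples/fuji": ["/apples/fuji/recipes"],
        "/blog": ["/blog/post-1"],
    }
    database = ["/", "/apples", "/apples/fuji", "/apples/fuji/recipes",
                "/apples/gala", "/blog", "/blog/post-1", "/old-landing-page"]
    depths = crawl_depths(links, "/")
    print("More than two levels deep:", [p for p, d in depths.items() if d > 2])
    print("Orphans:", orphan_pages(database, depths))
    print(without_url_variables("https://example.com/apples?sort=ascending"))
```

Note that this treats any page the crawl cannot reach from the start page as an orphan, which matches the cross-referencing approach in item 4: a page linked only from other orphans is reported as well.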

Mobile

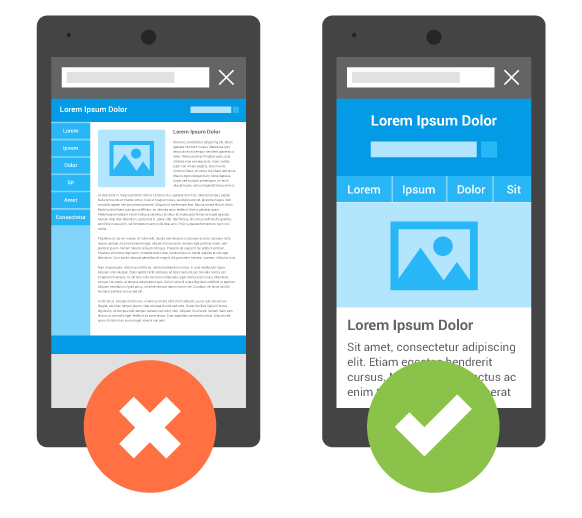

Virtually every modern startup should start right off the bat with a mobile-friendly interface and infrastructure. Check for and implement the following as early on as possible.

1. Google Mobile-friendly test: Run the Google Mobile-friendly test to identify any issues that Google specifically finds with how users will experience your site on mobile.

2. Implement responsive design: Your site should be responsive, meaning that it will function properly and look good to users no matter what device they are accessing your site from. If this is outside your wheelhouse, look for a theme labeled “responsive template.” Responsive themes are available for nearly all platforms, and some free options are almost always available. Be sure to eliminate any extraneous visual elements that are unnecessary to see from a mobile device. Use @media rules in your CSS to block these elements.

3. JavaScript and Flash: Verify that your pages work fine without JavaScript or Flash. Use your crawler or database to identify pages that reference small web format (.swf) and JavaScript (.js) files and visit these pages using a browser with JavaScript disabled and no Adobe Flash installed. If these pages are not fully functional, they will need to be reworked. Flash in general should be entirely replaced with cascading style sheets (CSS). JavaScript should only be used to dynamically alter HTML elements that are still functional in the absence of JavaScript.

4. Responsive navigation: Verify that your drop-down menus are functional on mobile devices and that the text width doesn’t make them unattractive or difficult to use.

5. Responsive images: Even some responsive themes can lose their responsiveness when large images are introduced. For example, placing a CSS rule such as img { max-width: 100%; height: auto; } between your <style> tags will ensure that images size down if the browser window is too small for the image.

6. Responsive videos and embeds: Videos, and especially embeds, can really bungle up responsive themes. For example, if you are using the HTML video tag, placing a CSS rule such as video { max-width: 100%; height: auto; } between your <style> tags will cause your videos to scale down with the browser window.

Read the full article at Search Engine Land.